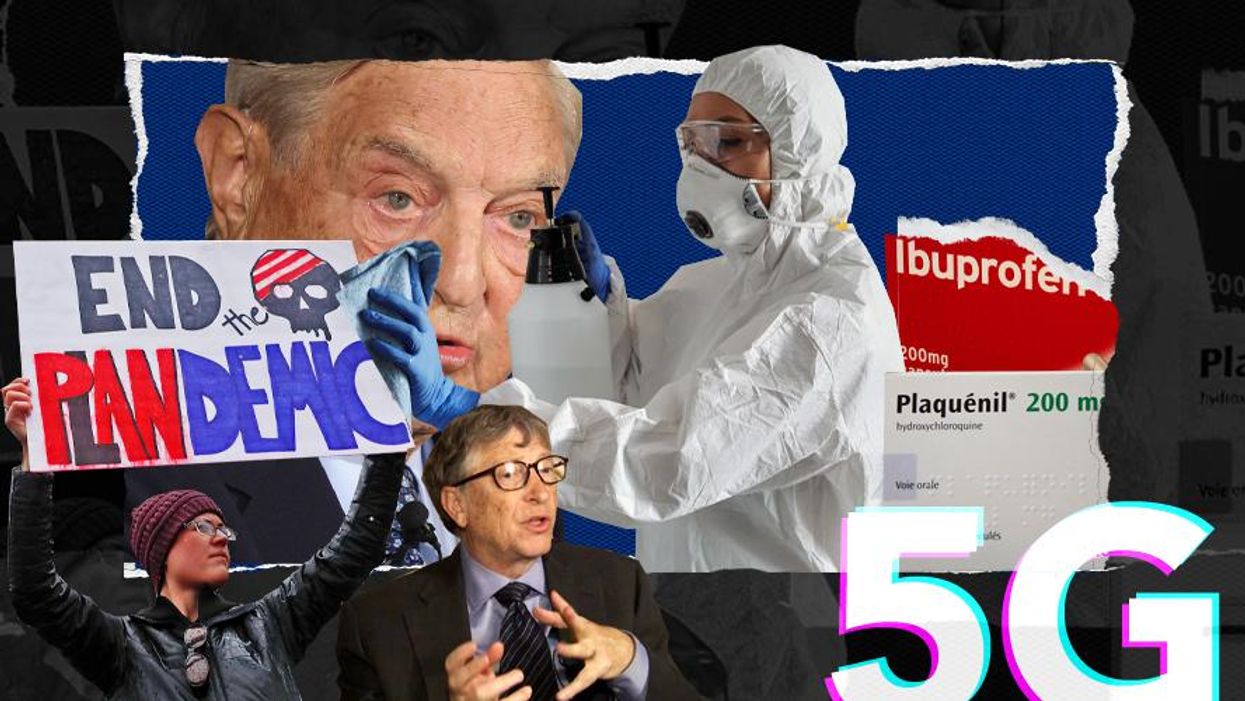

In 2021, social media companies failed to address the problem of dangerous COVID-19 lies and anti-vaccine content spreading on their platforms, despite the significant harm it caused users. Along with enabling this content to spread, some platforms profited from the dangerous misinformation, all while making hollow promises that prioritized positive news coverage over true accountability.

Many platforms instituted toothless moderation policies while letting propaganda encouraging distrust of the vaccine, science, and public health institutions run rampant. Media Matters researchers easily found content promoting dangerous fake cures for COVID-19, conspiracy theories about the virus’s origins and the safety of vaccines, and more on major social media networks throughout the year. Some platforms profited from this content, while others helped anti-vaccine influencers gain followings and monetize their misinformation in other ways.

This abundance of low quality or misleading information was not inevitable. The features that have come to define social media platforms — features that facilitate monetization, promote rapid content sharing, and encourage user engagement — accelerated and fostered misinformation about COVID-19 and the vaccines. And this misinformation has resulted in real and irreversible harms, like patients dying from COVID-19 yet still refusing to believe they have the illness. Social media companies could have taken action to mitigate the issues brought on by their platforms, but they did not, despite repeated warnings and demands for change.

Facebook

Throughout 2021, Facebook (now Meta) repeatedly failed to control the spread of egregious vaccine misinformation and other harmful COVID-19 lies, which were prevalent in the platform’s pages, public and private groups, comments, and ads.

Public pages remained a bastion of anti-vaccine misinformation

As shown in multiple previous Media Matters reports, right-leaning pages on Facebook earn more interactions than ideologically nonaligned or left-leaning pages, despite conservatives’ claims of censorship. In fact, right-leaning pages earned roughly 4.7 billion interactions on their posts between January 1 and September 21, while left-leaning and ideologically nonaligned pages earned about 2 billion and 3 billion, respectively.

In the past year, right-wing pages shared vaccine misinformation with little moderation or consequence from Facebook. Even when the pages were flagged or fact-checked, users found ways around Facebook’s Band-Aid solutions to continue pushing dangerous medical misinformation.

Right-wing figures such as Fox host Tucker Carlson and Pennsylvania state Sen. Doug Mastriano have used the platform to push anti-vaccine talking points and/or lie about the origins of the coronavirus.

In fact, vaccine-related posts from political pages this year were dominated by right-wing content. Right-leaning pages earned a total of over 116 million interactions on vaccine-related posts between January 1 and December 15 and accounted for 6 of the top 10 posts. Posts from right-leaning pages that dominated the vaccine discussions on Facebook included:

Third post in the top 10, with about 300,000 interactions:

Fifth post in the top 10, with over 266,000 interactions:

Private and public groups sowed some of the most dangerous discourse

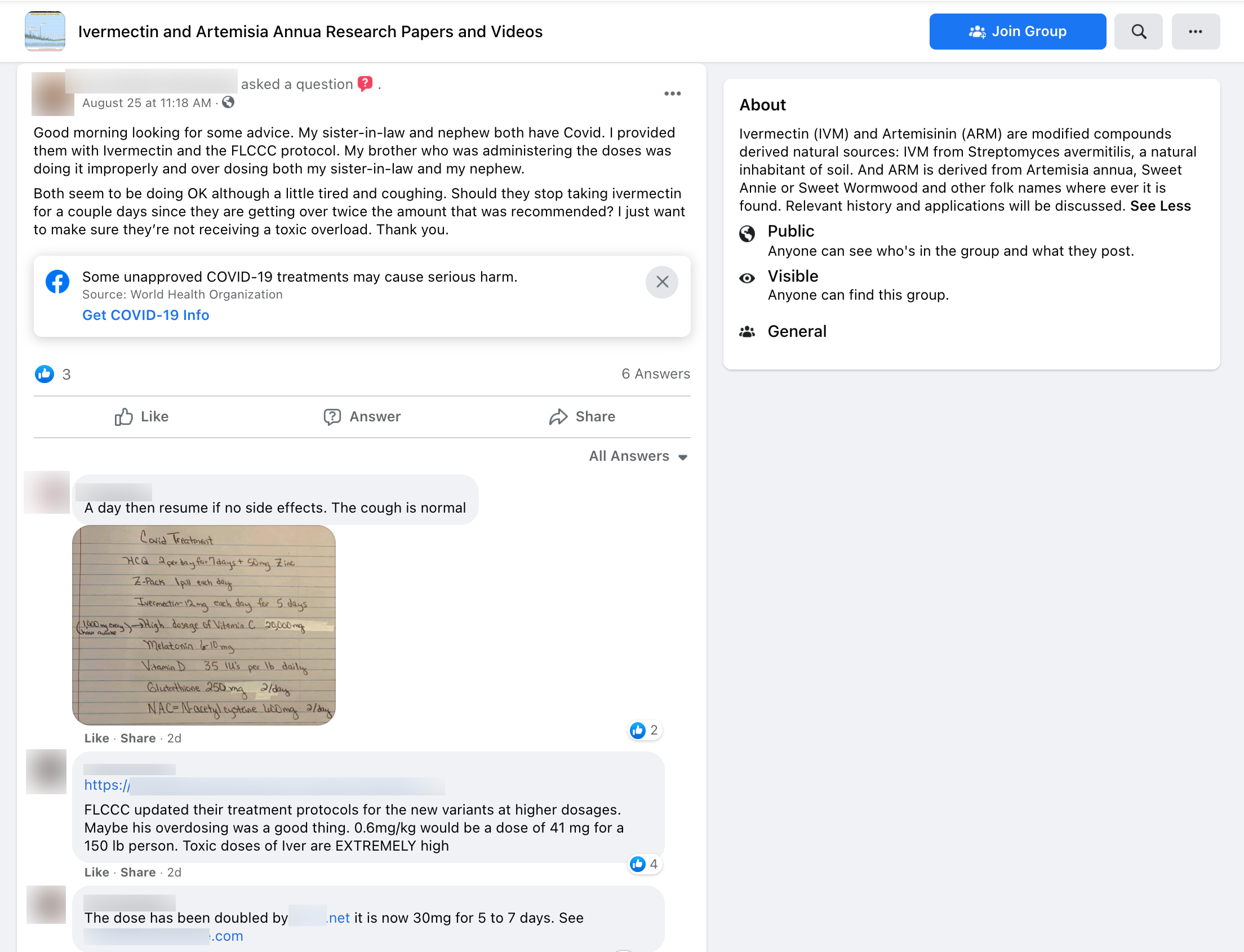

Groups on Facebook were also rife with harmful COVID-19 lies -- including dismissing the severity of COVID-19, promoting dangerous alternative treatments, and sharing baseless claims about the vaccine. In August, Media Matters reported on Facebook groups promoting the use of ivermectin as a prophylactic or treatment for COVID-19, even as government officials warned against it. As of the end of September, there were still 39 active ivermectin groups with over 68,000 members.

Media Matters has repeatedly identified anti-mask, anti-vaccine, and other similar groups dedicated to spreading COVID-19 and vaccine misinformation. Yet Facebook has failed to remove these groups, even though they appear to violate the platform’s policies.

In October, we identified 918 active groups that were dedicated to promoting COVID-19 and vaccine misinformation, with over 2 million combined members. These included groups discussing misleading and false stories of vaccine side effects and conspiracy theories about what is in the vaccine. We also recently identified at least 860 “parental rights” groups dedicated to opposing school policies around LGBTQ rights, sex education, so-called “critical race theory,” and other culture war issues, including at least 180 groups that promote explicit COVID-19, mask, or vaccine misinformation.

Comment sections continued to be a toxic part of Facebook, especially as users found ways to use them to evade Facebook’s ineffectual fact-checking and moderation efforts. Group administrators encouraged this behavior, asking members to put more extreme content in the comments and to use code words instead of “vaccine” or “COVID” to thwart moderation.

What’s worse, Facebook reportedly knew COVID-19 and vaccine misinformation was spreading in its comment sections and did little to prevent it.

Facebook continued to enjoy increased profits as misinformation spread on its platform

During all of this, Facebook has enjoyed increased profits -- including from ads promoting fringe platforms and pages that push vaccine misinformation. Media Matters found that Facebook was one of the top companies helping COVID-19 misinformation stay in business, and that it was taking a cut itself. Even after a federal complaint was filed against a fake COVID-19 cure circulating on Facebook (and Instagram), the platform -- against its own policy -- let it run rampant, generating profit.

Throughout 2021, Media Matters has followed how Facebook has enabled the spread of harmful COVID-19 lies, extremism, and more.

Instagram

Though often overshadowed by Facebook, Instagram — which is also owned by Meta — has similarly established itself as a conduit for dangerous lies, hate, and misinformation.

In 2021, there was no better example of Instagram’s shortcomings than its inability to stop the spread of anti-vaccine misinformation -- despite Instagram head Adam Mosseri’s persistent claims that the company takes vaccine-related misinformation “off the platform entirely.”

Insufficient moderation and consistent ban evasion by misinformers

In March, Media Matters found that despite Instagram’s ban on anti-vaccine content, anti-vaccine influencers earned tens of thousands of interactions by falsely claiming that the newly available COVID-19 vaccines were “dangerous,” and in some cases by claiming the shot was killing thousands of people.

A month later, Instagram removed several of the anti-vaccine accounts highlighted in our research, including several members of the so-called “Disinformation Dozen,” influencers the Center for Countering Digital Hate identified as the originators of an estimated 65 percent of vaccine misinformation spread on Facebook and Twitter between February 1 and March 16 of this year. Within days of the accounts’ removal, many of them were back on the platform, using ban evasion tactics.

Today you can still find accounts associated with seven members of the Disinformation Dozen and scores of similarly inclined influencers active on the platform. Practically speaking, not much has changed, despite Instagram’s ban on anti-vaccine content.

Instagram’s recommendation algorithm pushes users down anti-vaccine rabbit holes

In addition to allowing violative content to flourish, the platform’s algorithms also push users down anti-vaccine and health misinformation rabbit holes. In October, a Media Matters study found that Instagram’s suggested-content algorithm was actively promoting anti-vaccine accounts to users who demonstrated an interest in such content.

Similarly, the Center for Countering Digital Hate found that Instagram’s “Explore” page not only funneled users toward anti-vaccine posts, but also led them to other extreme content espousing the QAnon conspiracy theory and antisemitism, for instance.

Others who engaged with the platform have stumbled upon the same phenomenon: If a user demonstrates interest in extreme content, the algorithm feeds them more of it.

Instagram’s monetization features present unique dangers

As the company expands its e-commerce ambitions, bad actors are already abusing the platform's monetization features to finance dangerous propaganda. Instagram Shopping, which debuted in 2020, is filled with anti-vaccine merchandise. Pro-Trump businessman Mike Lindell and right-wing agitator Jack Posobiec teamed up to use the platform’s new link sticker feature — which allows users to link directly to external websites — to finance their crusade to undermine faith in American democracy.

Again and again, Instagram commits to addressing harmful content on its platform, but either fails to do so effectively, or waits until it’s way too late.

TikTok

In 2021, TikTok was used as an anti-mask organizing space and a launching pad for COVID-19 and vaccine misinformation. While the policies TikTok designed in response to the pandemic were strong on paper because they specifically addressed combating medical misinformation, the company has failed to meaningfully enforce them.

TikTok fails to proactively moderate dangerous medical misinformation

A large part of TikTok’s misinformation crisis comes from its moderation practices, which appear to be largely reactive. Although the company has removed some COVID-19 misinformation when highlighted by researchers or journalists, it has fundamentally failed to meaningfully preempt, detect, and curb health misinformation narratives before they go viral.

There is no excuse for a multibillion-dollar company behind the most downloaded social media app to have such insufficient moderation practices, especially when medical misinformation can seriously harm its users.

TikTok’s recommendation algorithm fed users COVID-19 and vaccine misinformation

Not only did TikTok fail to stop the spread of dangerous misinformation, but the company’s own recommendation algorithm also helped propel COVID-19 and vaccine falsehoods into virality -- hand-delivering harmful medical misinformation to unsuspecting users.

TikTok’s major appeal is its “For You” page (FYP), a personalized feed of videos individually tailored to each user. When COVID-19 misinformation goes viral, it’s often because TikTok’s algorithm feeds users this content on their FYP. Media Matters identified multiple instances of TikTok's own algorithm amplifying COVID-19 and vaccine misinformation. In our study, 18 videos containing COVID-19 misinformation — which at the time of the study had garnered over 57 million views — were fed to a Media Matters research account's FYP.

TikTok’s unregulated conspiracy theory problem creates a gateway to medical misinformation

The spread of conspiracy theories and misleading content on TikTok has created a pipeline from other false or harmful content to medical misinformation. Vaccine skepticism is tied to belief in conspiracy theories, which have long proliferated on the platform. Media Matters identified repeated circulation of videos from Infowars, a far-right conspiratorial media outlet, including those in which Infowars founder Alex Jones spreads COVID-19 misinformation.

Media Matters also found evidence of a gateway between conspiracy theory accounts and the spread of COVID-19 misinformation, as well as content promoting other far-right ideologies. In one instance, we followed a flat earth conspiracy theory account and TikTok’s account recommendation algorithm prompted us to follow an account pushing COVID-19 misinformation.

YouTube

In 2020, Media Matters documented YouTube’s repeated failure to enforce its own policies about COVID-19 misinformation. In 2021, the platform continued to allow this type of content to spread, despite its announcement of an expanded medical misinformation policy.

In September, well over a year into the pandemic, YouTube finally updated its policies around vaccine-related misinformation. However, these changes came too late, after videos such as the Planet Lockdown series collected at least 2.7 million views while on the platform. In the months following the policy expansion, YouTube’s enforcement of the new policies also proved to be far too little.

YouTube has failed to enforce its guidelines since early in the pandemic

Prior to the policy updates in September, Media Matters documented YouTube’s failure to sufficiently enforce its existing guidelines around COVID-19 misinformation. For example, the platform allowed right-wing commentator Charlie Kirk to baselessly speculate that 1.2 million people could have died from the COVID-19 vaccine. The platform also failed to remove numerous videos promoting deceptive claims about the use of ivermectin to treat COVID-19. (Since the original publication of the linked article, YouTube has removed three of the videos. The rest remain on the platform.) YouTube also hosted a two-hour live event featuring prominent anti-vaccine figures such as Robert F. Kennedy Jr.

Even after its September 2021 policy expansion, YouTube still fell short

After months of letting anti-vaccine and COVID lies flourish, YouTube announced in a blog post that it was expanding its policies because it was seeing “false claims about the coronavirus vaccines spill over into misinformation about vaccines in general.”

The blog stated that the platform would prohibit “content that falsely alleges that approved vaccines are dangerous and cause chronic health effects, claims that vaccines do not reduce transmission or contraction of disease,” and content that “contains misinformation on the substances contained in vaccines.”

After renewing its commitment to combating medical misinformation, the Alphabet Inc.-owned platform enjoyed a wave of mostly positive press. However, less than a week after this announcement was made, Media Matters uncovered numerous instances where enforcement of these new policies was falling short.

Despite banning individual accounts, YouTube allowed prominent anti-vaccine figures featured among the Disinformation Dozen to continue to spread misinformation on the platform. Recently, several of the videos were finally removed, but only after accumulating more than 4.9 million views.

Media Matters also found that YouTube allowed numerous videos promoting ivermectin to remain on the site following the new policy debut, and also permitted advertisements for the drug, some of which promoted it as an antiviral for human use.

Additionally, we identified a YouTube video from right-wing group Project Veritas claiming to show a “whistleblower” exposing harms caused by the COVID-19 vaccine. The video, which provides no real evidence or context, accumulated millions of views despite violating YouTube’s updated guidelines.

In 2021, YouTube has repeatedly failed to enforce its own policies. In addition to hosting ample misinformation about COVID-19 and vaccines, the platform has profited from recruitment videos for a militia that has been linked to violence and election fraud lies. It has allowed right-wing propaganda network PragerU to fundraise while spreading transphobia, and is still falling short on its promise to crack down on QAnon content.

Methodology

Media Matters used the following method to compile and analyze vaccine-related posts from political pages on Facebook:

Using CrowdTangle, Media Matters compiled a list of 1,773 Facebook pages that frequently posted about U.S. politics from January 1 to August 25, 2020.

For an explanation of how we compiled pages and identified them as right-leaning, left-leaning, or ideologically nonaligned, see the methodology here.

The resulting list consisted of 771 right-leaning pages, 497 ideologically nonaligned pages, and 505 left-leaning pages.

Every day, Media Matters also uses Facebook's CrowdTangle tool and this methodology to identify and share the 10 posts with the most interactions from top political and news-related Facebook pages.

Using CrowdTangle, Media Matters compiled all posts for the pages on this list (with the exception of UNICEF – a page that Facebook boosts) that were posted between January 1 and December 15, 2021, and were related to vaccines. We reviewed data for these posts, including total interactions (reactions, comments, and shares).

We defined posts as related to vaccines if they had any of the following terms in the message or in the included link, article headline, or article description: “vaccine,” “anti-vaccine,” “vaxx,” “vaxxed,” “anti-vaxxed,” “Moderna,” “Pfizer,” “against vaccines,” “pro-vaccines,” “support vaccines,” “vax,” “vaxed,” “anti-vax,” “pro-vaccine,” “pro-vaxx,” or “pro-vax.”
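For readers who want to see how the filtering and ranking steps above fit together, here is a minimal Python sketch. It is an illustration only, not the code Media Matters ran. The `Post` record and its field names are hypothetical and do not reflect CrowdTangle's export format. The matching rule (case-insensitive substring search) is also an assumption, since the methodology does not say how terms were matched. Only the term list, the UNICEF exclusion, and the definition of total interactions come from the text.

```python
from dataclasses import dataclass

# Terms quoted in the methodology above.
VACCINE_TERMS = [
    "vaccine", "anti-vaccine", "vaxx", "vaxxed", "anti-vaxxed", "Moderna",
    "Pfizer", "against vaccines", "pro-vaccines", "support vaccines",
    "vax", "vaxed", "anti-vax", "pro-vaccine", "pro-vaxx", "pro-vax",
]

# The methodology excludes the UNICEF page from the compiled posts.
EXCLUDED_PAGES = {"UNICEF"}


@dataclass
class Post:
    """Hypothetical post record; field names are illustrative only."""
    page: str
    message: str = ""
    link: str = ""
    headline: str = ""
    description: str = ""
    reactions: int = 0
    comments: int = 0
    shares: int = 0

    @property
    def total_interactions(self) -> int:
        # Total interactions = reactions + comments + shares.
        return self.reactions + self.comments + self.shares


def is_vaccine_related(post: Post) -> bool:
    """Assumed rule: case-insensitive substring match on any listed term."""
    text = " ".join(
        [post.message, post.link, post.headline, post.description]
    ).lower()
    return any(term.lower() in text for term in VACCINE_TERMS)


def top_vaccine_posts(posts, n=10):
    """Return the n vaccine-related posts with the most total interactions."""
    related = [
        p for p in posts
        if p.page not in EXCLUDED_PAGES and is_vaccine_related(p)
    ]
    return sorted(related, key=lambda p: p.total_interactions, reverse=True)[:n]
```

Under substring matching, several listed terms are redundant (any text containing "anti-vaccine" also contains "vaccine"), so the original search tool may well have matched terms differently.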

Article reprinted with permission from Media Matters